Semantic Sound Synthesis with Agents

April 2024

In March last year, with my long-time collaborator Barney Hill, I released Vroom VST, a text-to-sound plugin created for producers. It leveraged AudioLDM, a cutting-edge AI model at the time. However, once it was in the hands of musicians, it became obvious that text-to-sound has limited applications; labeled sound samples already exist in abundance, with companies like Splice providing access to over 400 million of them for a monthly subscription.

After chatting with educators like Tim at You Suck At Producing, we decided we needed a tool that guides and teaches you to shape sound with the wide variety of traditional instruments and effects that already exist, rather than generating raw sound from scratch. We knew we wanted it to integrate with Ableton Live, but we were somewhat in limbo until Barney found Daniel Jones' AbletonOSC library, which lets you read and write Ableton's internal state via the OSC (Open Sound Control) network protocol.
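Because AbletonOSC speaks plain OSC over UDP, very little code is needed to drive it. Below is a minimal, stdlib-only Python sketch that hand-encodes an OSC 1.0 message and sends it to `/live/device/set/parameter/value` on port 11000. That address and default port are taken from our reading of the AbletonOSC README, so check them against the version you install. The helper names `encode_osc` and `set_device_parameter` are our own, and the track, device and parameter indices you pass are placeholders for whatever your Live set contains.

```python
import socket
import struct


def _pad(data: bytes) -> bytes:
    """Null-terminate and pad to a 4-byte boundary, as OSC strings require."""
    data += b"\x00"
    return data + b"\x00" * (-len(data) % 4)


def encode_osc(address: str, *args) -> bytes:
    """Encode an OSC 1.0 message with int32, float32 and string arguments."""
    tags = ","
    payload = b""
    for arg in args:
        if isinstance(arg, bool):
            # bool is a subclass of int in Python; OSC uses separate T/F tags,
            # which this sketch does not implement.
            raise TypeError("bool arguments are not supported in this sketch")
        if isinstance(arg, int):
            tags += "i"
            payload += struct.pack(">i", arg)  # big-endian int32
        elif isinstance(arg, float):
            tags += "f"
            payload += struct.pack(">f", arg)  # big-endian float32
        elif isinstance(arg, str):
            tags += "s"
            payload += _pad(arg.encode("utf-8"))
        else:
            raise TypeError(f"unsupported OSC argument: {arg!r}")
    return _pad(address.encode("utf-8")) + _pad(tags.encode("ascii")) + payload


def set_device_parameter(track: int, device: int, param: int, value: float,
                         host: str = "127.0.0.1", port: int = 11000) -> None:
    """Ask AbletonOSC to set one device parameter (fire-and-forget UDP).

    Assumes AbletonOSC is running in Live and listening on `port`.
    """
    msg = encode_osc("/live/device/set/parameter/value",
                     track, device, param, float(value))
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(msg, (host, port))
```

For example, `set_device_parameter(0, 0, 5, 0.8)` would ask Live to set parameter 5 of the first device on the first track. A library such as python-osc would do the encoding for you; it is written out by hand here only to show how small the protocol is.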

An early prototype of Vroom Live.

“make my synth brighter” opens up the filter on Ableton's Analog synth.

Our design was an agent that presents the user with a familiar chat interface. Our thesis was that we could build on top of a rich history of synth interface design and the broad training data that LLMs have seen. That data includes extensive discussion on forums like ModWiggler, where users hold long debates about the differences between, and uses of, the parameters and controls on every type of instrument.

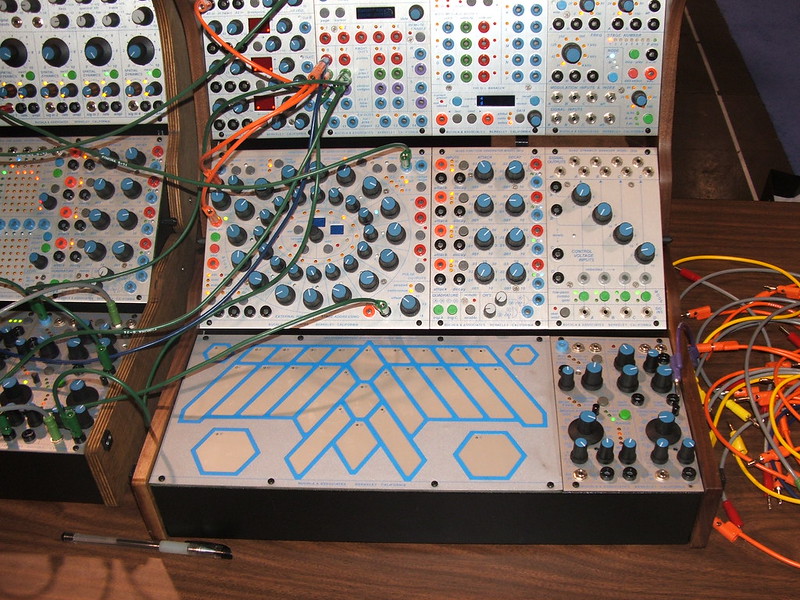

As early as the 1960s, pioneers like Don Buchla made synthesizers with natural, unique interfaces: fuzzy controls like timbre, or novel, ambiguous graphics that serve to defocus the underlying electronics and privilege human intention. This radical idea, that an interface should be symbolic and intuitive at the expense of accuracy, is commonplace in software engineering; we just call it abstraction.

A Buchla modular synthesizer at NAMM 2007, posted by the Flickr user Synthesizers. Authentic to the original 1960s/1970s designs.
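A fuzzy control like timbre maps neatly onto a familiar software pattern: one symbolic macro that fans out to several concrete parameters. Below is a hypothetical Python sketch of a "brightness" macro. The parameter names, the 20 Hz to 20 kHz cutoff range and the resonance curve are illustrative assumptions, not values taken from Ableton's Analog or from Vroom Live.

```python
# Hypothetical filter range; real devices expose their own ranges and units.
MIN_CUTOFF_HZ = 20.0
MAX_CUTOFF_HZ = 20_000.0


def _clamp01(x: float) -> float:
    """Keep a knob value inside 0..1."""
    return min(max(x, 0.0), 1.0)


def brightness_to_cutoff(brightness: float) -> float:
    """Map a fuzzy 0..1 'brightness' knob to a filter cutoff in Hz.

    Uses an exponential (log-frequency) curve, so equal knob movements
    give roughly equal changes in perceived pitch.
    """
    b = _clamp01(brightness)
    return MIN_CUTOFF_HZ * (MAX_CUTOFF_HZ / MIN_CUTOFF_HZ) ** b


def brightness_macro(brightness: float) -> dict:
    """One symbolic control fanning out to several concrete parameters."""
    b = _clamp01(brightness)
    return {
        "filter_cutoff_hz": brightness_to_cutoff(b),
        # Hypothetical: ease the resonance up a little as the filter opens.
        "filter_resonance": 0.1 + 0.2 * b,
    }
```

The exponential curve is a deliberate choice: pitch perception is roughly logarithmic in frequency, so a knob mapped linearly in Hz would already sit near 2 kHz at a tenth of its travel, cramming most of the audible change into the bottom of the range.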

After a weekend of hacking, Vroom Live was born. Under the hood, it uses OpenAI's function calling, although it could be ported to work with other language models. It shines particularly when your samples and effects are descriptively named, so it can infer what genre or style of music you're working on. It also works well with virtual recreations of traditional synthesizers, translating even the broadest sonic wishes into settings on their interfaces.
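With function calling, the model never touches Ableton directly; it returns the name of a tool plus JSON-encoded arguments, and the application executes them. Below is a minimal Python sketch of that local half of the loop, assuming a single hypothetical `set_device_parameter` tool (Vroom Live's actual tool set is not described here). It shows a tool definition in the JSON Schema shape that OpenAI's `tools` parameter accepts, and a dispatcher, `dispatch_tool_call` (also our own name), that parses the arguments and routes them to a handler. No API call is made, so it runs offline.

```python
import json

# Hypothetical tool definition in the shape OpenAI's function calling expects.
SET_PARAMETER_TOOL = {
    "type": "function",
    "function": {
        "name": "set_device_parameter",
        "description": "Set one parameter on a device in the Ableton Live set.",
        "parameters": {
            "type": "object",
            "properties": {
                "track_id": {"type": "integer"},
                "device_id": {"type": "integer"},
                "parameter_id": {"type": "integer"},
                "value": {"type": "number"},
            },
            "required": ["track_id", "device_id", "parameter_id", "value"],
        },
    },
}


def dispatch_tool_call(name: str, arguments: str, handlers: dict) -> str:
    """Route a model's tool call to a local handler and return a JSON result.

    `arguments` is the JSON string the model produced. Failures are returned
    as data rather than raised, so they can be reported back to the model.
    """
    handler = handlers.get(name)
    if handler is None:
        return json.dumps({"error": f"unknown tool: {name}"})
    try:
        kwargs = json.loads(arguments)
    except json.JSONDecodeError as exc:
        return json.dumps({"error": f"bad arguments: {exc.msg}"})
    try:
        result = handler(**kwargs)
    except TypeError as exc:  # e.g. missing or unexpected argument names
        return json.dumps({"error": str(exc)})
    return json.dumps({"ok": True, "result": result})
```

In a full loop, the returned string would go back to the model as a `tool` role message carrying the matching `tool_call_id`, and the handler would forward the values to Ableton, for instance over AbletonOSC. Returning errors as data lets the model correct itself on its next turn instead of crashing the session.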

Are you interested in using Vroom Live or working with us? Send us an email.

Vroom Live ⸺ watch the 'amount' control on the multiband compressor.